Iris Segmentation Code Based on the GST

Revision as of 13:40, 5 August 2015

| Iris Segmentation Code |
|---|
| Contact: Fernando Alonso-Fernandez |

Introduction

This page provides software for iris segmentation based on the Generalized Structure Tensor (GST), following publications [1] and [2] (listed at the bottom of this page). It was developed mainly during the 2011-2013 FP7 Marie Curie IEF Action BIO-DISTANCE, but it also contains improvements and add-ons incorporated at later points in time. We continue to work on this topic, so if you are interested, it may be worth checking from time to time whether new improvements have been added to the code.

The software accepts an iris image as input and outputs segmentation information for that image (see below for details). It can handle images acquired in both the near-infrared (NIR) and visible (VW) spectrum.

The GST code consists of the following steps (some can be deactivated or customized; please read the documentation included with the code):

1) Image downsampling for speed. This does not compromise accuracy: the detected iris circles are later fitted to the irregular iris contours, which compensates for any loss of resolution in circle detection caused by downsampling.

2) Contrast normalization based on the Matlab function imadjust. This increases image contrast by spreading the grey values over the full 0-255 range.

3) Specular reflection removal based on the method published in reference [3].

4) Computation of the image frequency based on the method published in reference [2]. The frequency is used to adapt internal parameters of steps 5-8 to the input image.

5) Adaptive eyelash removal using the image frequency, as indicated in reference [2]. The method is based on p-rank filters as published in reference [4]. Eyelashes are removed because they create strong vertical edges that may mislead the filters used for eye center estimation and iris segmentation in steps 7 and 9.

6) Adaptive edge map computation using the image frequency, as indicated in reference [2]. The edge map is the basis for eye center estimation and iris boundary detection; see references [1, 2] for further details.

7) Estimation of the eye center based on the method published in reference [2] using circular symmetry filters. The estimated center is used to mask candidate regions for the centers of the iris circles, helping to improve detection accuracy in step 9.

8) Detection of eyelids based on linear symmetry detection of horizontal edges (unpublished and unoptimized; it only returns a straight line). The detected eyelids are also used to mask candidate regions for the centers of the iris circles, helping to improve detection accuracy in step 9.

9) Detection of iris boundaries based on the method published in reference [1] using the Generalized Structure Tensor (GST). In NIR images, the inner (pupil) circle is detected first, while in VW images, the outer (sclera) circle is detected first. This is because in NIR images the pupil-to-iris transition is sharper than the iris-to-sclera transition, and thus more reliable to detect first. The opposite happens in VW images.

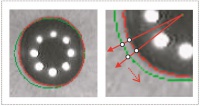
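The following is a minimal, illustrative sketch of steps 1, 2, 5, 6 and 7 in plain Python, operating on images stored as lists of rows of grey values. It is not the distributed Matlab code: block averaging, a linear stretch that saturates about 1% of the pixels at each end (similar in spirit to the defaults of imadjust), a fixed-size horizontal rank filter, and a brute-force search over a simple doubled-angle circular-symmetry score are simplified stand-ins chosen for readability. All function names and parameter values are hypothetical, and neither the frequency-adaptive tuning of steps 4-6 nor the actual circular symmetry filters of [2] are reproduced.

```python
import cmath
import math

# Illustrative sketch only: images are lists of rows of grey values, and every
# function name and default parameter below is hypothetical.


def downsample(img, factor=2):
    """Step 1: block-average downsampling by an integer factor
    (incomplete trailing rows/columns are dropped)."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [[sum(img[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / factor ** 2
             for x in range(w)] for y in range(h)]


def stretch_contrast(img, tol=0.01):
    """Step 2: linear stretch to 0-255 that saturates about a fraction `tol`
    of the pixels at each end (similar in spirit to imadjust's defaults)."""
    vals = sorted(v for row in img for v in row)
    lo = vals[int(tol * (len(vals) - 1))]
    hi = vals[int((1 - tol) * (len(vals) - 1))]
    if hi <= lo:  # flat image: nothing to stretch
        return [list(row) for row in img]
    return [[min(255.0, max(0.0, 255.0 * (v - lo) / (hi - lo))) for v in row]
            for row in img]


def p_rank_rows(img, half_width=2, p=2):
    """Step 5: horizontal 1-D rank filter. Each pixel becomes the p-th smallest
    value (0-based) in its row window; with p == half_width (a median), dark
    vertical lines up to half_width pixels wide, such as thin eyelashes, are
    replaced by their brighter neighbours (away from the row ends).
    Window and rank are fixed here; the method above adapts them to the
    image frequency."""
    out = []
    for row in img:
        new_row = []
        for x in range(len(row)):
            window = sorted(row[max(0, x - half_width):x + half_width + 1])
            new_row.append(window[min(p, len(window) - 1)])
        out.append(new_row)
    return out


def complex_edge_map(img):
    """Step 6: squared complex gradient z = (fx + i*fy)**2 from central
    differences, so |z| = |grad f|**2 and arg(z) = 2 * gradient angle.
    Border pixels are left at 0."""
    h, w = len(img), len(img[0])
    z = [[0j] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            fx = (img[y][x + 1] - img[y][x - 1]) / 2.0
            fy = (img[y + 1][x] - img[y - 1][x]) / 2.0
            z[y][x] = complex(fx, fy) ** 2
    return z


def circular_symmetry(z, cx, cy):
    """Step 7 (simplified stand-in): magnitude-weighted agreement between each
    doubled gradient angle and the doubled radial angle seen from (cx, cy).
    Returns a value in [-1, 1]; +1 means all edges are circles centred there."""
    num, den = 0.0, 0.0
    for y, row in enumerate(z):
        for x, val in enumerate(row):
            if val == 0 or (x, y) == (cx, cy):
                continue
            num += (val * cmath.exp(-2j * math.atan2(y - cy, x - cx))).real
            den += abs(val)
    return num / den if den else 0.0


def estimate_center(z):
    """Brute-force search for the interior pixel with the highest score."""
    h, w = len(z), len(z[0])
    candidates = [(x, y) for y in range(1, h - 1) for x in range(1, w - 1)]
    return max(candidates, key=lambda c: circular_symmetry(z, c[0], c[1]))


# Usage: a synthetic image whose grey level grows with the squared distance
# from (12, 10), so its central-difference gradients point exactly radially.
img = [[float((x - 12) ** 2 + (y - 10) ** 2) for x in range(25)] for y in range(21)]
print(estimate_center(complex_edge_map(img)))  # → (12, 10)
print(p_rank_rows([[200, 200, 200, 20, 200, 200, 200]]))  # → [[200, 200, 200, 200, 200, 200, 200]]
```

Squaring the complex gradient maps opposite gradient directions (dark-to-bright and bright-to-dark) to the same value, so both edge polarities vote consistently for the same circle centre. The exhaustive centre search makes one pass over the image per candidate pixel, so it is only practical on small images.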

10) Irregular contour fitting based on active contours as published in reference [5].
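Reference [5] describes iris boundaries by a truncated discrete Fourier series of the contour data. The sketch below illustrates that idea under simple assumptions: a boundary is given as N radial distances sampled at equally spaced angles around a centre, and only the harmonics |m| <= n_harmonics are kept (the default of 3 is an arbitrary choice). The function names are hypothetical, and this is not the code distributed on this page.

```python
import cmath
import math


def fourier_smooth_contour(radii, n_harmonics=3):
    """Keep only harmonics |m| <= n_harmonics of the discrete Fourier series of
    a closed contour given as radial distances r_k at angles 2*pi*k/N.
    Requires 2 * n_harmonics < N so that no two kept harmonics coincide."""
    n = len(radii)
    if 2 * n_harmonics >= n:
        raise ValueError("need 2 * n_harmonics < len(radii)")
    # Forward coefficients: C_m = (1/N) * sum_k r_k * exp(-2*pi*i*m*k/N)
    coeffs = {
        m: sum(r * cmath.exp(-2j * math.pi * m * k / n) for k, r in enumerate(radii)) / n
        for m in range(-n_harmonics, n_harmonics + 1)
    }
    # Truncated inverse series; for real radii C_-m is the conjugate of C_m,
    # so the imaginary parts cancel and .real only drops rounding noise
    return [
        sum(c * cmath.exp(2j * math.pi * m * k / n) for m, c in coeffs.items()).real
        for k in range(n)
    ]


def contour_to_points(cx, cy, radii):
    """Convert radial distances around (cx, cy) back to (x, y) image points."""
    n = len(radii)
    return [(cx + r * math.cos(2 * math.pi * k / n), cy + r * math.sin(2 * math.pi * k / n))
            for k, r in enumerate(radii)]


# Usage: a boundary with a smooth elongation (2nd harmonic) plus a
# high-frequency ripple (11th harmonic) that the truncation removes.
N = 32
noisy = [10 + 2 * math.cos(2 * t) + 0.5 * math.cos(11 * t)
         for t in (2 * math.pi * k / N for k in range(N))]
smooth = fourier_smooth_contour(noisy)
print(round(smooth[0], 6))  # → 12.0
```

Fewer harmonics give a more rigid, nearly circular contour; more harmonics let the contour follow finer irregularities (and noise).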

The code outputs the following information for the input iris image:

- Segmentation circles of the iris region (inner and outer circle) as well as eyelids (in the form of a straight line)

- Irregular (non-circular) iris boundaries fitted by active contours

- Estimated eye center (computed at the beginning and used to assist in the segmentation)

- Intermediate images after contrast normalization, specular reflection removal, and eyelash removal

- Complex edge map of the input image

- Binary segmentation mask
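As an illustration of how a binary mask relates to the other outputs, the sketch below builds one from two circles and two straight eyelid lines. The representations (circles as (cx, cy, r), eyelids as y = slope*x + intercept with y growing downward) and the function name are assumptions for this example, not the format used by the distributed code.

```python
def iris_mask(height, width, pupil, iris, upper_lid=None, lower_lid=None):
    """Return a height x width list of 0/1 rows where 1 marks iris pixels.

    pupil, iris: circles as (cx, cy, r) in pixels (hypothetical format).
    upper_lid, lower_lid: optional straight lines (slope, intercept) meaning
    y = slope * x + intercept, with y growing downward as in image coordinates.
    """
    def inside(x, y, circle):
        cx, cy, r = circle
        return (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2

    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Iris ring: inside the outer circle but outside the pupil circle
            keep = inside(x, y, iris) and not inside(x, y, pupil)
            # Drop pixels above the upper eyelid line...
            if keep and upper_lid is not None:
                keep = y >= upper_lid[0] * x + upper_lid[1]
            # ...and below the lower eyelid line
            if keep and lower_lid is not None:
                keep = y <= lower_lid[0] * x + lower_lid[1]
            row.append(1 if keep else 0)
        mask.append(row)
    return mask


# Usage: concentric circles and a horizontal upper eyelid at y = 5.
mask = iris_mask(21, 21, pupil=(10, 10, 3), iris=(10, 10, 8), upper_lid=(0.0, 5.0))
print(mask[10][10], mask[10][15], mask[3][10])  # → 0 1 0
```

The mask is indexed as mask[y][x] (row first); pixels lying exactly on a circle are counted as inside it.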

Terms and Conditions

This code is provided without any warranty and for research purposes only.

The code is provided in the form of executables compiled with Matlab R2009b, 32-bit (mcc command), under Windows 8.1. It accepts as input grayscale and RGB images in any format supported by the Matlab function imread (uint8 only).

Certain parameters of the code are customizable; please read the documentation included with the code for more information.

Please remember to cite reference [1] (below) if you make use of this code in any publication.

By downloading the code, you agree with the terms and conditions indicated above.

Download the code here (latest release: July 2015)

People responsible

- Fernando Alonso-Fernandez (contact person)

- Josef Bigun

References

[1] F. Alonso-Fernandez, J. Bigun, "Iris Boundaries Segmentation Using the Generalized Structure Tensor. A Study on the Effects on Image Degradation", Proc. Intl Conf on Biometrics: Theory, Apps and Systems, BTAS, Washington DC, September 23-26, 2012 (http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:545745)

[2] F. Alonso-Fernandez, J. Bigun, "Near-infrared and visible-light periocular recognition with Gabor features using frequency-adaptive automatic eye detection", IET Biometrics, Volume 4, Issue 2, pp. 74-89, June 2015

[3] C. Rathgeb, A. Uhl, P. Wild, "Iris Biometrics: From Segmentation to Template Security", Springer, 2013

[4] Z. He, T. Tan, Z. Sun, X. Qiu, "Toward accurate and fast iris segmentation for iris biometrics", IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 31, (9), pp. 1295–1307

[5] J. Daugman, "New methods in iris recognition", IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics, 37(5), 2007