Difference between revisions of "Iris Segmentation Groundtruth"

| (32 intermediate revisions by one user not shown) | |||

| Line 1: | Line 1: | ||

| − | '''Segmentation is a critical part in iris recognition systems''', since errors in this initial stage are propagated to subsequent processing stages. Therefore, the performance of iris segmentation algorithms is paramount to the performance of the overall system. In order to properly evaluate and develop iris segmentation algorithm, especially under difficult conditions like off-angle and significant occlusions or bad lighting, '''it is beneficial to directly assess the segmentation algorithm'''. Currently, when evaluating the performance of iris segmentation algorithms, this is '''mostly done by utilizing the recognition rate''', and consequently the overall performance of the biometric system. However, the overall recognition performance is not only affected by the segmentation accuracy, but also by the performance of the other subsystems based on possible suboptimal segmentation of the iris. As such it is '''difficult to differentiate between defects in the iris segmentation system and effects which might be introduced later''' in the system (you can read more about this phenomenon in our ICB 2013 publication [http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:607425 here]). | + | {{Infobox |

| + | |image = [[Image:Circularity1.jpg|200px]] | ||

| + | |header1 = Iris Segmentation Groundtruth | ||

| + | |header2 = '''Contact:''' [[Fernando Alonso-Fernandez]] | ||

| + | }} | ||

| + | |||

| + | {| class="wikitable" | ||

| + | !colspan="1" | The content of this page has been moved to our [https://github.com/HalmstadUniversityBiometrics/Iris-Segmentation-Groundtruth-Database Github repository]. You can read this same content and download the files there. | ||

| + | |} | ||

| + | |||

| + | ='''Introduction'''= | ||

| + | |||

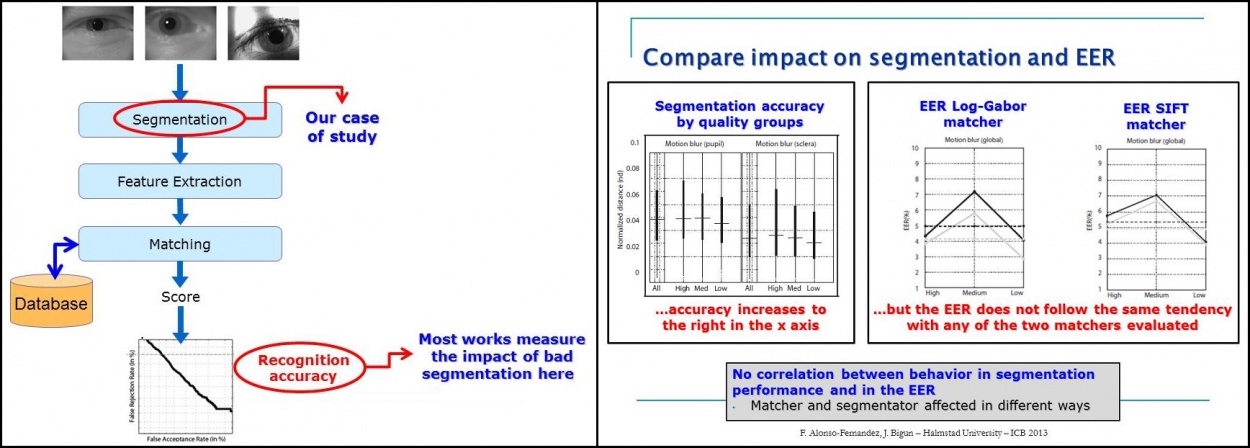

| + | '''Segmentation is a critical part in iris recognition systems''', since errors in this initial stage are propagated to subsequent processing stages. Therefore, the performance of iris segmentation algorithms is paramount to the performance of the overall system. In order to properly evaluate and develop iris segmentation algorithm, especially under difficult conditions like off-angle and significant occlusions or bad lighting, '''it is beneficial to directly assess the segmentation algorithm'''. Currently, when evaluating the performance of iris segmentation algorithms, this is '''mostly done by utilizing the recognition rate''', and consequently the overall performance of the biometric system. However, the overall recognition performance is not only affected by the segmentation accuracy, but also by the performance of the other subsystems based on possible suboptimal segmentation of the iris. As such it is '''difficult to differentiate between defects in the iris segmentation system and effects which might be introduced later''' in the system (you can read more about this phenomenon in our ICB 2013 publication [http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:607425 here], which is summarized in the following figures). | ||

| + | |||

| + | <br /> | ||

| + | |||

| + | [[Image:2013_ICB-FAlonso_v2.jpg|center|1250px]] | ||

| + | |||

| + | <br /> | ||

| + | |||

| + | |||

| + | ='''Dataset overview'''= | ||

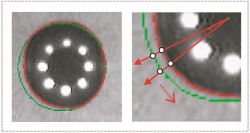

For these reasons, with the purpose of allowing assessment of iris segmentation algorithms with independence of the whole biometric system, '''we have generated an iris segmentation ground truth database'''. We have segmented '''a total of 12,621 iris images from 7 databases'''. This data is now made publicly available, and can be used to analyse existing and test new iris segmentation algorithms. The iris segmentation database ('''IRISSEG''') contains a mask for each iris image in form of parameters and a method to extract the mask. The database is partitioned into two datasets based on the shapes used for segmenting the iris and eyelid, the '''CC and EP dataset'''. For the CC dataset the parameters define circles which give the iris boundaries and eyelid maskings. For the EP dataset the parameters define ellipses for the iris and polynomials for the eyelid. Note that the eyelid parametrization was done in a way to ensure the best possible separation of iris and eyelids in the iris region, i.e. outside the iris region the parametrization is not necessarily accurate. Eyelashes occlusion is not included in the segmentation data. In addition, only iris segmentation data is provided in the IRISSEG dataset, not the original eye image databases, since they are not owned by us. A link to the actual iris databases is included in each case, please refer to them in order to obtain the original databases. | For these reasons, with the purpose of allowing assessment of iris segmentation algorithms with independence of the whole biometric system, '''we have generated an iris segmentation ground truth database'''. We have segmented '''a total of 12,621 iris images from 7 databases'''. This data is now made publicly available, and can be used to analyse existing and test new iris segmentation algorithms. The iris segmentation database ('''IRISSEG''') contains a mask for each iris image in form of parameters and a method to extract the mask. 
The database is partitioned into two datasets based on the shapes used for segmenting the iris and eyelid, the '''CC and EP dataset'''. For the CC dataset the parameters define circles which give the iris boundaries and eyelid maskings. For the EP dataset the parameters define ellipses for the iris and polynomials for the eyelid. Note that the eyelid parametrization was done in a way to ensure the best possible separation of iris and eyelids in the iris region, i.e. outside the iris region the parametrization is not necessarily accurate. Eyelashes occlusion is not included in the segmentation data. In addition, only iris segmentation data is provided in the IRISSEG dataset, not the original eye image databases, since they are not owned by us. A link to the actual iris databases is included in each case, please refer to them in order to obtain the original databases. | ||

| + | |||

| + | <br /> | ||

| + | |||

| + | [[Image:Circularity1.jpg|center|250px]] | ||

| + | |||

| + | <br /> | ||

'''This page describes the CC dataset (IRISSEG-CC Dataset)''', which has been generated by '''Halmstad University'''. It contains ground truth data of: | '''This page describes the CC dataset (IRISSEG-CC Dataset)''', which has been generated by '''Halmstad University'''. It contains ground truth data of: | ||

| − | * BioSec Multimodal Biometric Database Baseline (1200 iris images) | + | * '''BioSec''' Multimodal Biometric Database Baseline (1200 iris images) |

| − | * Casia Iris v3 Interval Database (2655 iris images) | + | * '''Casia''' Iris v3 Interval Database (2655 iris images) |

| − | * MobBIO Database, iris train dataset (800 iris images) | + | * '''MobBIO''' Database, iris train dataset (800 iris images) |

| − | + | <br /> | |

| − | * Casia Iris v4 Interval Database (2639 iris images) | + | The '''EP dataset''' has been generated by the '''University of Salzburg''', and it can be obtained [http://www.wavelab.at/sources/Hofbauer14b/ here]. The EP dataset contains ground truth data of: |

| − | * IIT Delhi Iris Database version 1.0 (2240 iris images) | + | |

| − | * Notre Dame IRIS-0405 Database (837 iris images) | + | * '''Casia''' Iris v4 Interval Database (2639 iris images) |

| − | * UBIRIS v2 Iris Database (2250 iris images) | + | * '''IIT Delhi''' Iris Database version 1.0 (2240 iris images) |

| + | * '''Notre Dame''' IRIS-0405 Database (837 iris images) | ||

| + | * '''UBIRIS''' v2 Iris Database (2250 iris images) | ||

| + | |||

| + | <br /> | ||

A '''summary''' of the '''IRISSEG''' database is given in the following table: | A '''summary''' of the '''IRISSEG''' database is given in the following table: | ||

| + | |||

{| class="wikitable" | {| class="wikitable" | ||

| Line 91: | Line 123: | ||

|} | |} | ||

| − | = | + | <br /> |

| + | |||

| + | |||

| + | = '''Halmstad database of iris segmentation ground truth (IRISSEG-CC Dataset)''' = | ||

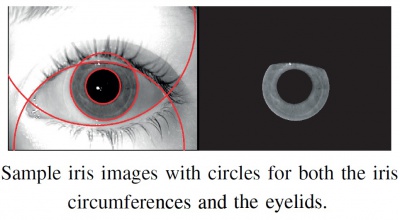

The parameters given in this dataset define circles (centre and radius) which give the iris boundaries and eyelid masks. Three points of each circle have been manually marked by an operator, which are used to compute the corresponding radius and centre. An example is as follows: | The parameters given in this dataset define circles (centre and radius) which give the iris boundaries and eyelid masks. Three points of each circle have been manually marked by an operator, which are used to compute the corresponding radius and centre. An example is as follows: | ||

| − | [[Image:sample_mask.jpg|400px]] | + | [[Image:sample_mask.jpg|center|400px]] |

| + | |||

| + | Ground truth segmentation data from the following databases are available in the IRISSEG-CC Dataset. | ||

| + | |||

| + | Please note that this repository only contains groundtruth data. We do not provide here the original iris databases, since we are not the owners of such databases (you must obtain them from their rightful owners). Below we provide links to the repositories of the iris databases for your convenience (we do not have control of the links to the original databases, please do a Google or similar search to try to find the database website if the links below do not work anymore). | ||

| − | + | '''Please remember to cite references [1] and [2] (below) on any work made public based directly or indirectly on the IRISSEG-CC Dataset''' (do not forget also to cite the appropriate publications of the original eye image databases, as indicated by their owners). | |

=== BioSec Multimodal Biometric Database Baseline (iris part) === | === BioSec Multimodal Biometric Database Baseline (iris part) === | ||

| Line 104: | Line 143: | ||

'''Link to the original database''': [http://atvs.ii.uam.es/databases.jsp click here] | '''Link to the original database''': [http://atvs.ii.uam.es/databases.jsp click here] | ||

| − | + | <!-- | |

| − | '''Ground truth segmentation files''': [http:// | + | '''Ground truth segmentation files''': [http://wiki.hh.se/caisr/index.php/File:Biosec_baseline_iris_groundtruth.zip click here] |

| − | + | --> | |

=== Casia Iris v3 Interval Database === | === Casia Iris v3 Interval Database === | ||

| Line 113: | Line 152: | ||

'''Link to the original database''': [http://www.cbsr.ia.ac.cn/IrisDatabase.htm click here] | '''Link to the original database''': [http://www.cbsr.ia.ac.cn/IrisDatabase.htm click here] | ||

| − | + | <!-- | |

| − | '''Ground truth segmentation files''': [http:// | + | '''Ground truth segmentation files''': [http://wiki.hh.se/caisr/index.php/File:CASIA-IrisV3-Interval_groundtruth.zip click here] |

| − | + | --> | |

=== MobBIO Database (iris train dataset) === | === MobBIO Database (iris train dataset) === | ||

| Line 121: | Line 160: | ||

The iris training subset of the MobBIO database, containing 800 images of 240x200 pixels from 100 subjects, was fully segmented. Images were captured with the Asus Eee Pad Transformer TE300T Tablet (webcam in visible light) in two different lighting conditions, with variable eye orientations and occlusion levels, resulting in a large variability of acquisition conditions. Distance to the camera was kept constant, however. | The iris training subset of the MobBIO database, containing 800 images of 240x200 pixels from 100 subjects, was fully segmented. Images were captured with the Asus Eee Pad Transformer TE300T Tablet (webcam in visible light) in two different lighting conditions, with variable eye orientations and occlusion levels, resulting in a large variability of acquisition conditions. Distance to the camera was kept constant, however. | ||

| − | '''Link to the original database''': [ | + | '''Link to the original database''': [https://web.fe.up.pt/~mobbio2013// click here] |

| + | <!-- | ||

| + | '''Ground truth segmentation files''': [http://wiki.hh.se/caisr/index.php/File:MobBIOTrainDataset_iris_groundtruth.zip click here] | ||

| + | --> | ||

| + | |||

| + | |||

| + | '''DOWNLOAD THE GROUNDTRUTH FILES''' | ||

| + | |||

| + | Please download the groundtruth files in our Github repository, [https://github.com/HalmstadUniversityBiometrics/Iris-Segmentation-Groundtruth-Database here]. | ||

| + | |||

| + | |||

| + | === Iris segmentation software code === | ||

| + | |||

| + | You may also be interested in our [http://wiki.hh.se/caisr/index.php/Iris_Segmentation_Code_Based_on_the_GST iris segmentation code] based on the Generalized Structure Tensor (GST). | ||

| + | |||

| + | |||

| + | === People responsible === | ||

| − | + | * [http://wiki.hh.se/caisr/index.php/Fernando_Alonso-Fernandez Fernando Alonso-Fernandez] (contact person) | |

| + | * [http://wiki.hh.se/caisr/index.php/Josef_Bigun Josef Bigun] | ||

=== References === | === References === | ||

| − | '''Please remember to cite the following references on any work made public based directly or indirectly on the IRISSEG-CC Dataset''' (do not forget also to cite the appropriate publications of the original | + | '''Please remember to cite the following references on any work made public based directly or indirectly on the IRISSEG-CC Dataset''' (do not forget also to cite the appropriate publications of the original iris image databases, as indicated by their owners): |

| − | # Heinz Hofbauer, Fernando Alonso-Fernandez, Peter Wild, Josef Bigun and Andreas Uhl, “A Ground Truth for Iris Segmentation”, Proc. [ | + | # Fernando Alonso-Fernandez, Josef Bigun, “Near-infrared and visible-light periocular recognition with Gabor features using frequency-adaptive automatic eye detection”, [http://digital-library.theiet.org/content/journals/iet-bmt, IET Biometrics], Volume 4, Issue 2, pp. 74-89, June 2015 ([http://digital-library.theiet.org/content/journals/10.1049/iet-bmt.2014.0038 link to the publication in IET Biometrics]) |

| + | # Heinz Hofbauer, Fernando Alonso-Fernandez, Peter Wild, Josef Bigun and Andreas Uhl, “A Ground Truth for Iris Segmentation”, Proc. [https://iapr.org/archives/icpr2014/ 22nd International Conference on Pattern Recognition, ICPR,] Stockholm, August 24-28, 2014 ([http://hh.diva-portal.org/smash/record.jsf?searchId=1&pid=diva2:710627 link to the publication]) | ||

| − | '''You may also want to read our other publications making use of the IRISSEG database''' (below) or to read more about '''our iris research''' ([http:// | + | '''You may also want to read our other publications making use of the IRISSEG database''' (below) or to read more about '''our iris research''' ([http://wiki.hh.se/caisr/index.php/BIO-DISTANCE here]). You are particularly invited to read our '''ICB 2013 paper''', where we demonstrate that segmentation and matching performance are not necessarily affected by the same factors, with segmentation errors due to low image quality not necessarily revealed by the matcher. '''You are also invited to send your own publications of your research making use of the IRISSEG database to [[Fernando Alonso-Fernandez]] if you would like to see them here''': |

| − | + | * F. Alonso-Fernandez, J. Bigun, “Eye Detection by Complex Filtering for Periocular Recognition”, Proc. [http://www.um.edu.mt/events/iwbf2014/ 2nd COST IC1106 International Workshop on Biometrics and Forensics, IWBF,] Valletta, Malta, March 27-28, 2014 ([http://hh.diva-portal.org/smash/record.jsf?searchId=1&pid=diva2:697661 link to the publication]) | |

| − | + | * F. Alonso-Fernandez, J. Bigun, “Quality Factors Affecting Iris Segmentation and Matching”, Proc. [http://atvs.ii.uam.es/icb2013 6th IAPR Intl Conf on Biometrics, ICB,] Madrid, June 4-7, 2013 ([http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:607425 link to the publication]) | |

| − | + | * F. Alonso-Fernandez, J. Bigun, “Periocular Recognition Using Retinotopic Sampling and Gabor Decomposition”, Proc. [https://sites.google.com/site/wiaf2012/ Intl Workshop “What's in a Face?” WIAF,] in conjunction with the [http://eccv2012.unifi.it/ European Conference on Computer Vision, ECCV,] Springer LNCS-7584, pp. 309-318, Firenze, Italy, October 7-13, 2012 ([http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:545741 link to the publication]) | |

| − | + | * F. Alonso-Fernandez, J. Bigun, “Iris Boundaries Segmentation Using the Generalized Structure Tensor. A Study on the Effects on Image Degradation”, Proc. [https://sites.google.com/a/nd.edu/btas_2012/ Intl Conf on Biometrics: Theory, Apps and Systems, BTAS,] Washington DC, September 23-26, 2012 ([http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:545745 link to the publication]) | |

Latest revision as of 13:46, 15 September 2021

| Iris Segmentation Groundtruth |
|---|
| Contact: Fernando Alonso-Fernandez |

| The content of this page has been moved to our GitHub repository (https://github.com/HalmstadUniversityBiometrics/Iris-Segmentation-Groundtruth-Database). You can read this same content and download the files there. |
|---|

Introduction

Segmentation is a critical part of iris recognition systems, since errors in this initial stage propagate to subsequent processing stages. The performance of iris segmentation algorithms is therefore paramount to the performance of the overall system. To properly evaluate and develop iris segmentation algorithms, especially under difficult conditions such as off-angle views, significant occlusions or bad lighting, it is beneficial to assess the segmentation algorithm directly. Currently, the performance of iris segmentation algorithms is mostly evaluated through the recognition rate, and consequently through the overall performance of the biometric system. However, the overall recognition performance is affected not only by the segmentation accuracy, but also by how the other subsystems perform when given a possibly suboptimal segmentation of the iris. As such, it is difficult to differentiate between defects in the iris segmentation system and effects which might be introduced later in the system (you can read more about this phenomenon in our ICB 2013 publication, http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:607425, which is summarized in the following figures).

Dataset overview

For these reasons, and to allow iris segmentation algorithms to be assessed independently of the whole biometric system, we have generated an iris segmentation ground truth database. We have segmented a total of 12,621 iris images from 7 databases. This data is now publicly available, and can be used to analyse existing iris segmentation algorithms and to test new ones. The iris segmentation database (IRISSEG) contains a mask for each iris image in the form of parameters, together with a method to extract the mask. The database is partitioned into two datasets, CC and EP, based on the shapes used for segmenting the iris and eyelids. For the CC dataset the parameters define circles which give the iris boundaries and eyelid masks. For the EP dataset the parameters define ellipses for the iris and polynomials for the eyelids. Note that the eyelid parametrization was done so as to ensure the best possible separation of iris and eyelids within the iris region, i.e. outside the iris region the parametrization is not necessarily accurate. Eyelash occlusion is not included in the segmentation data. In addition, the IRISSEG dataset provides only iris segmentation data, not the original eye image databases, since they are not owned by us. A link to the actual iris databases is included in each case; please refer to them in order to obtain the original databases.
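As a rough illustration of how circle parameters of the CC kind could be turned into a binary mask, here is a minimal Python sketch. The function names, the `(cx, cy, r)` tuple layout, and in particular the convention that pixels inside an eyelid circle count as occluded are assumptions made for this example only. The extraction method distributed with the database remains the authoritative one.

```python
def inside(circle, x, y):
    """Return True if pixel (x, y) lies inside or on the circle (cx, cy, r)."""
    cx, cy, r = circle
    return (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2


def iris_mask(width, height, pupil, iris, eyelid_circles=()):
    """Rasterize a boolean iris mask, indexed as mask[y][x].

    Assumed convention (hypothetical, not taken from the dataset files):
    a pixel is iris if it is inside the outer iris circle, outside the
    pupil circle, and not inside any eyelid circle.
    """
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            in_ring = inside(iris, x, y) and not inside(pupil, x, y)
            occluded = any(inside(c, x, y) for c in eyelid_circles)
            row.append(in_ring and not occluded)
        mask.append(row)
    return mask


# Toy 20x20 image: pupil radius 3 and iris radius 8 around (10, 10),
# plus one large circle standing in for the upper eyelid.
mask = iris_mask(20, 20, pupil=(10, 10, 3), iris=(10, 10, 8),
                 eyelid_circles=[(10, -20, 25)])
```

A mask produced this way can be compared pixel by pixel against the output of a segmentation algorithm, which is the kind of direct assessment the database is intended to support.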

This page describes the CC dataset (IRISSEG-CC Dataset), which has been generated by Halmstad University. It contains ground truth data of:

- BioSec Multimodal Biometric Database Baseline (1200 iris images)

- Casia Iris v3 Interval Database (2655 iris images)

- MobBIO Database, iris train dataset (800 iris images)

The EP dataset has been generated by the University of Salzburg, and it can be obtained from http://www.wavelab.at/sources/Hofbauer14b/. The EP dataset contains ground truth data of:

- Casia Iris v4 Interval Database (2639 iris images)

- IIT Delhi Iris Database version 1.0 (2240 iris images)

- Notre Dame IRIS-0405 Database (837 iris images)

- UBIRIS v2 Iris Database (2250 iris images)
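The per-database counts listed above can be checked against the stated total of 12,621 images with a few lines of Python. The dictionary names are chosen for this example only; the numbers are the ones given in the two lists.

```python
# Image counts as listed for each dataset.
cc_counts = {
    "BioSec": 1200,
    "Casia Iris v3 Interval": 2655,
    "MobBIO": 800,
}
ep_counts = {
    "Casia Iris v4 Interval": 2639,
    "IIT Delhi 1.0": 2240,
    "Notre Dame 0405": 837,
    "UBIRIS v2": 2250,
}

cc_total = sum(cc_counts.values())   # 4655 images in IRISSEG-CC
ep_total = sum(ep_counts.values())   # 7966 images in IRISSEG-EP
total = cc_total + ep_total          # 12621 images from 7 databases

print(cc_total, ep_total, total)
```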

A summary of the IRISSEG database is given in the following table:

IRISSEG-CC Dataset (Halmstad University)

| Database | Available samples | Image size | Sensor | Other info |
|---|---|---|---|---|
| BioSec | 1200 images | 640x480 | LG IrisAccess EOU3000 close-up near-infrared camera | Office-like environment |
| Casia Iris v3 Interval | 2655 images | 320x280 | CASIA close-up near-infrared camera | Indoor, extremely clear iris texture details |
| MobBIO | 800 images | 240x200 | Asus Eee Pad Transformer TE300T Tablet (webcam) | Two lighting conditions, variable eye orientation and occlusion |

IRISSEG-EP Dataset (University of Salzburg)

| Database | Available samples | Image size | Sensor | Other info |
|---|---|---|---|---|
| Casia Iris v4 Interval | 2639 images | 320x280 | CASIA close-up near-infrared camera | Indoor, extremely clear iris texture details |
| IIT Delhi 1.0 | 2240 images | 320x240 | JIRIS JPC1000, digital CMOS near-infrared camera | Indoor, frontal view (no off-angle) |
| Notre Dame 0405 | 837 images | 640x480 | LG 2200 close-up near-infrared camera | Indoor, images with off-angle, blur, interlacing, and occlusion |
| UBIRIS v2 | 2250 images | 400x300 | Nikon E5700 digital camera | Natural luminosity. Heterogeneity in reflections, contrast, focus and occlusions. Frontal and off-angle from various distances. |

Halmstad database of iris segmentation ground truth (IRISSEG-CC Dataset)

The parameters given in this dataset define circles (centre and radius) which give the iris boundaries and eyelid masks. For each circle, an operator manually marked three points, from which the corresponding centre and radius are computed. An example mask is shown in the image sample_mask.jpg on the original page.
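The step from three marked points to a centre and radius can be sketched with the standard circumcircle formula. This is a minimal Python illustration and not necessarily the code used to build the dataset. The function name and the `(x, y)` tuple format are assumptions made for the example.

```python
import math


def circle_from_three_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle passing through three (x, y) points.

    Uses the closed-form circumcentre of the triangle p1-p2-p3.
    Raises ValueError if the points are (nearly) collinear.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Twice the signed area of the triangle; zero means collinear points.
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        raise ValueError("points are collinear; no unique circle exists")
    s1 = x1 * x1 + y1 * y1
    s2 = x2 * x2 + y2 * y2
    s3 = x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    r = math.hypot(x1 - cx, y1 - cy)
    return cx, cy, r


# Three points on a circle of radius 5 centred at (10, 20).
cx, cy, r = circle_from_three_points((15, 20), (10, 25), (5, 20))
print(cx, cy, r)  # 10.0 20.0 5.0
```

Points that are well spread along the boundary give a more stable estimate than three points clustered close together, since nearly collinear points make the denominator `d` small.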

Ground truth segmentation data from the following databases are available in the IRISSEG-CC Dataset.

Please note that this repository contains only groundtruth data. We do not provide the original iris databases here, since we do not own them (you must obtain them from their rightful owners). For your convenience, we provide links to the repositories of the iris databases below. We have no control over these links, so if one no longer works, please use a web search to find the database website.

Please remember to cite references [1] and [2] (below) in any publicly released work based directly or indirectly on the IRISSEG-CC Dataset (please also cite the appropriate publications of the original eye image databases, as indicated by their owners).

BioSec Multimodal Biometric Database Baseline (iris part)

The BioSec database has 3,200 iris images of 640x480 pixels from 200 subjects, acquired with an LG IrisAccess EOU3000 close-up infrared iris camera. Here, we use a subset comprising data from 75 subjects (totalling 1,200 iris images), for which iris and eyelid segmentation groundtruth is available.

Link to the original database: http://atvs.ii.uam.es/databases.jsp

Casia Iris v3 Interval Database

The CASIA-Iris-Interval subset of the CASIA v3.0 database, containing 2655 iris images of 320x280 pixels from 249 subjects, was fully segmented. Images were acquired with a close-up infrared iris camera in an indoor environment. A circular NIR LED array yields images with very clear iris texture details.

Link to the original database: http://www.cbsr.ia.ac.cn/IrisDatabase.htm

MobBIO Database (iris train dataset)

The iris training subset of the MobBIO database, containing 800 images of 240x200 pixels from 100 subjects, was fully segmented. Images were captured with the Asus Eee Pad Transformer TE300T Tablet (webcam in visible light) in two different lighting conditions, with variable eye orientations and occlusion levels, resulting in a large variability of acquisition conditions. Distance to the camera was kept constant, however.

Link to the original database: https://web.fe.up.pt/~mobbio2013//

DOWNLOAD THE GROUNDTRUTH FILES

Please download the groundtruth files from our GitHub repository: https://github.com/HalmstadUniversityBiometrics/Iris-Segmentation-Groundtruth-Database

Iris segmentation software code

You may also be interested in our iris segmentation code based on the Generalized Structure Tensor (GST): http://wiki.hh.se/caisr/index.php/Iris_Segmentation_Code_Based_on_the_GST

People responsible

- Fernando Alonso-Fernandez (contact person)

- Josef Bigun

References

Please remember to cite the following references in any publicly released work based directly or indirectly on the IRISSEG-CC Dataset (please also cite the appropriate publications of the original iris image databases, as indicated by their owners):

1. Fernando Alonso-Fernandez, Josef Bigun, “Near-infrared and visible-light periocular recognition with Gabor features using frequency-adaptive automatic eye detection”, IET Biometrics, Volume 4, Issue 2, pp. 74-89, June 2015 (http://digital-library.theiet.org/content/journals/10.1049/iet-bmt.2014.0038)

2. Heinz Hofbauer, Fernando Alonso-Fernandez, Peter Wild, Josef Bigun and Andreas Uhl, “A Ground Truth for Iris Segmentation”, Proc. 22nd International Conference on Pattern Recognition, ICPR, Stockholm, August 24-28, 2014 (http://hh.diva-portal.org/smash/record.jsf?searchId=1&pid=diva2:710627)

You may also want to read our other publications making use of the IRISSEG database (below), or to read more about our iris research (http://wiki.hh.se/caisr/index.php/BIO-DISTANCE). You are particularly invited to read our ICB 2013 paper, where we demonstrate that segmentation and matching performance are not necessarily affected by the same factors, and that segmentation errors due to low image quality are not necessarily revealed by the matcher. If you would like to see your own publications making use of the IRISSEG database listed here, please send them to Fernando Alonso-Fernandez:

- F. Alonso-Fernandez, J. Bigun, “Eye Detection by Complex Filtering for Periocular Recognition”, Proc. 2nd COST IC1106 International Workshop on Biometrics and Forensics, IWBF, Valletta, Malta, March 27-28, 2014 (http://hh.diva-portal.org/smash/record.jsf?searchId=1&pid=diva2:697661)

- F. Alonso-Fernandez, J. Bigun, “Quality Factors Affecting Iris Segmentation and Matching”, Proc. 6th IAPR Intl Conf on Biometrics, ICB, Madrid, June 4-7, 2013 (http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:607425)

- F. Alonso-Fernandez, J. Bigun, “Periocular Recognition Using Retinotopic Sampling and Gabor Decomposition”, Proc. Intl Workshop “What's in a Face?” WIAF, in conjunction with the European Conference on Computer Vision, ECCV, Springer LNCS-7584, pp. 309-318, Firenze, Italy, October 7-13, 2012 (http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:545741)

- F. Alonso-Fernandez, J. Bigun, “Iris Boundaries Segmentation Using the Generalized Structure Tensor. A Study on the Effects on Image Degradation”, Proc. Intl Conf on Biometrics: Theory, Apps and Systems, BTAS, Washington DC, September 23-26, 2012 (http://hh.diva-portal.org/smash/record.jsf?searchId=2&pid=diva2:545745)