BIO-DISTANCE

Biometrics at a Distance

| BIO-DISTANCE | |
|---|---|
| Project start: | 7 January 2011 |
| Project end: | 30 June 2013 |
| Contact: | Fernando Alonso-Fernandez |
| Application Area: | Biometrics |


Abstract

Despite the practical importance and the advantages of biometric solutions to the task of verifying personal identity, their adoption has proved to be slower than predicted. Biometrics on the move is a hot research topic that aims to acquire biometric data at a distance as a person walks past the detection equipment. This drastically reduces the need for user cooperation, achieving low intrusiveness and, thus, high acceptance and transparency. With these ideas in mind, the main objective of this project is to investigate a number of activities aimed at making biometric technologies applicable to data acquired at a distance and/or on the move. We propose the use of face and iris as the reference modalities, since they are the two traits attracting the most research effort, thanks to the possibility of acquiring them simultaneously. This project is an integrated approach that covers the whole structure of a biometric system, including basic research and algorithm development for the different stages of the system, as well as practical results through the implementation and evaluation of case studies. To accomplish the overall objectives, a number of challenges need to be overcome, which constitute the specific research objectives.

FP7-PEOPLE-2009-IEF Marie Curie Action Project No: 254261

Iris Quality Measures

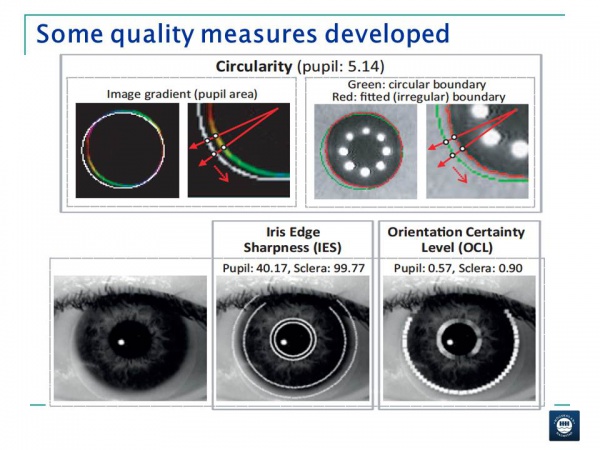

Several quality measures of the iris region have been implemented and evaluated, both locally (around the iris boundaries) and globally (over the whole eye region), including some novel algorithms proposed in this project. We have aimed to quantify the image properties reported in the literature as having the greatest influence on iris recognition accuracy, in support of the standard ISO/IEC 29794-6 Biometric Sample Quality (Part 6: Iris image). The eight algorithms implemented measure: defocus blur (1 algorithm), motion blur and image interlace (1 algorithm), contrast of the iris boundaries (2 algorithms), circularity of the iris boundaries (1 algorithm), gray-scale spread (2 algorithms), and occlusion (1 algorithm).
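As an illustration of what such measures compute, the sketch below implements three simple proxies in Python with NumPy: a Laplacian-variance sharpness score (a common defocus indicator), the entropy of the gray-level histogram as a gray-scale spread proxy, and the fraction of iris pixels covered by an occlusion mask. These are generic formulations assumed for illustration, not the specific algorithms of publication [2] or the exact definitions in ISO/IEC 29794-6; all function names are hypothetical.

```python
import numpy as np

def defocus_score(img):
    """Variance of a 4-neighbour Laplacian; lower values suggest more defocus blur."""
    f = np.asarray(img, dtype=float)
    lap = (f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
           - 4.0 * f[1:-1, 1:-1])
    return float(lap.var())

def gray_spread_entropy(img):
    """Shannon entropy (bits) of the 8-bit histogram; 0 for a flat image, 8 at most."""
    hist = np.bincount(np.asarray(img, dtype=np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def occlusion_fraction(iris_mask, occluded_mask):
    """Share of iris pixels hidden by eyelids, lashes or reflections (0..1)."""
    iris = np.asarray(iris_mask, dtype=bool)
    occ = np.asarray(occluded_mask, dtype=bool)
    return float((iris & occ).sum() / max(int(iris.sum()), 1))

# Usage: a sharp checkerboard scores higher than a 3x3 box-blurred copy.
checker = (np.indices((64, 64)).sum(axis=0) % 2) * 255.0
blurred = sum(checker[i:i + 62, j:j + 62] for i in range(3) for j in range(3)) / 9.0
print(defocus_score(checker) > defocus_score(blurred))  # True
```

Each function returns a single scalar per image, so scores from different measures can later be compared or combined per sample.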

The algorithms are described in publication [2] below. The following is a slide from the conference presentation:

Iris Detection and Segmentation Algorithms

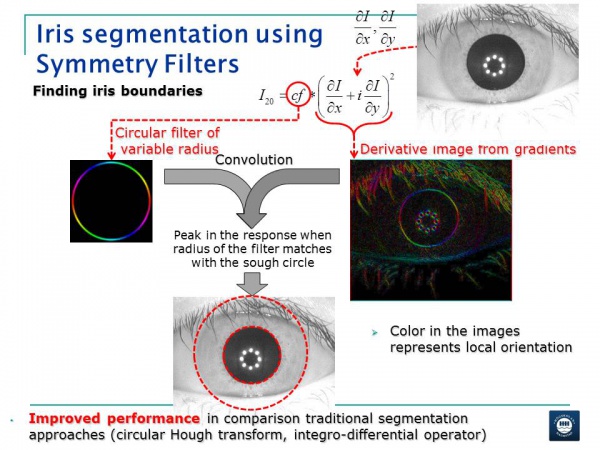

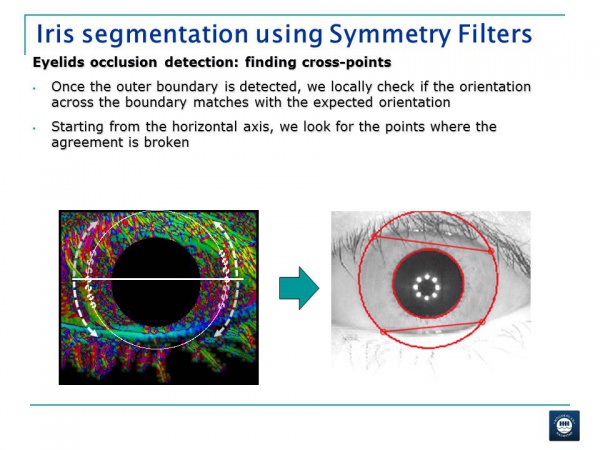

Iris segmentation algorithm

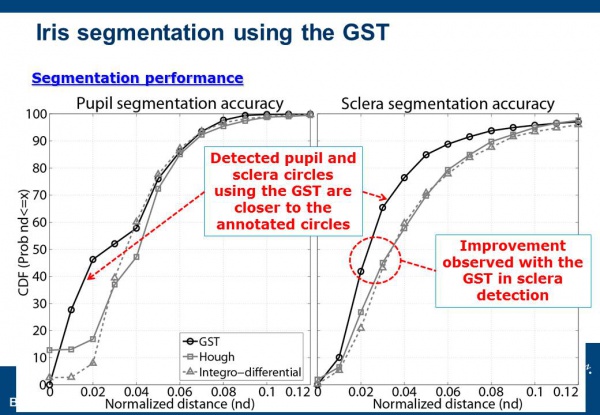

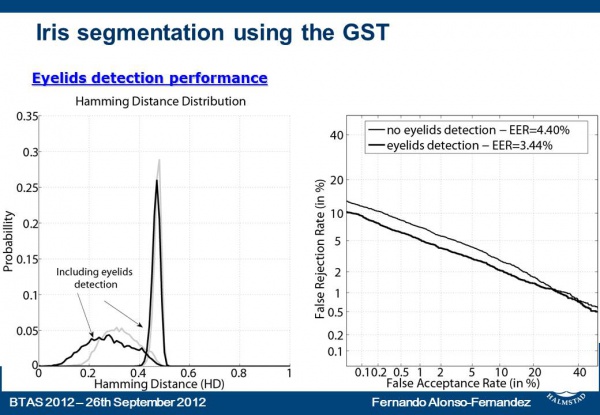

A complete system has been developed for segmenting the iris boundaries and detecting occluding eyelids. It is based on the Generalized Structure Tensor (GST) theory, introduced 20 years ago by Prof. Josef Bigun to analyze the local orientation of images. Using manual segmentation as the benchmark, our system has shown a considerable improvement over popular methods that do not analyze the local orientation of the image (such as the Circular Hough Transform or the Integro-Differential operator). These results show the validity of our proposed approach and demonstrate that the GST constitutes an alternative to classic iris segmentation approaches.
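The core idea can be sketched as follows, assuming the usual complex-valued formulation of the structure tensor. The image gradient is squared in complex form, z = (f_x + i f_y)^2, and this orientation field is convolved with a complex filter (x - i y)^2 g(x, y), where g is a Gaussian. Edges that are radial with respect to the filter centre add up in phase, so the real part of the response I20 peaks at the centre of circular structures, while I11 (the same convolution on magnitudes) bounds |I20|. This is a simplified, single-scale illustration in Python/NumPy with hypothetical function names. The actual system in [5] and [6] also searches over boundary radii and handles eyelids, which is not shown.

```python
import numpy as np

def conv2_same(a, k):
    """Linear 2-D convolution via FFT, cropped to the size of `a` (k must be odd-sized)."""
    H, W = a.shape
    kh, kw = k.shape
    shape = (H + kh - 1, W + kw - 1)  # large enough to avoid circular wrap-around
    full = np.fft.ifft2(np.fft.fft2(a, shape) * np.fft.fft2(k, shape))
    return full[kh // 2:kh // 2 + H, kw // 2:kw // 2 + W]

def circular_symmetry(img, sigma):
    """Return (I20, I11) for an order-2 circular-symmetry filter of scale sigma."""
    f = np.asarray(img, dtype=float)
    fy, fx = np.gradient(f)               # derivatives along rows (y) and columns (x)
    z = (fx + 1j * fy) ** 2               # complex orientation field
    half = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    g = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    kernel = (x - 1j * y) ** 2 * g        # cancels the phase of radial (x + iy)^2 edges
    i20 = conv2_same(z, kernel)
    i11 = conv2_same(np.abs(z), np.abs(kernel)).real
    return i20, i11

def detect_circle_centre(img, sigma):
    """(row, col) where Re(I20) is largest, i.e. the strongest circular pattern."""
    i20, _ = circular_symmetry(img, sigma)
    return np.unravel_index(np.argmax(i20.real), i20.shape)

# Usage: a dark disk (a crude "pupil") of radius 10 centred at row 40, col 52.
yy, xx = np.mgrid[0:96, 0:96]
disk = np.where((yy - 40) ** 2 + (xx - 52) ** 2 <= 10 ** 2, 0.0, 1.0)
print(detect_circle_centre(disk, sigma=7.0))
```

The scale is chosen so that the ring weight r^2 g(r) peaks near the expected radius (at r = sigma * sqrt(2), about 10 pixels here). Straight edges give a negative real response, which is why the real part rather than the magnitude is maximised.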

The GST segmentation algorithm is described in publications [5] and [6] below. The following are some slides from the conference presentations:

Impact of quality components in the iris segmentation

We have also analyzed the impact of image defocus and motion blur on the segmentation. The reported results show the superior resilience of the GST compared with the popular segmentation methods mentioned above: performance is similar in pupil detection (inner iris boundary) and clearly better in sclera detection (outer iris boundary) for all levels of degradation. It is worth noting that the outer iris boundary (sclera) is usually more difficult to detect than the inner iris boundary (pupil). Since the pupil is generally darker than the iris, the pupil-iris transition is sharper, and therefore easier to detect, than the softer transition between the iris and the sclera. Our GST system therefore provides better detection of the sclera boundary than traditional systems.
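To run this kind of study, degraded versions of each image are needed. A minimal sketch, assuming defocus is modelled as Gaussian blur and motion blur as a horizontal line kernel (common simplifications; the kernel shapes and degradation levels actually used in [5] are not given here), is shown below in Python/NumPy. Function names and levels are hypothetical.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalised 2-D Gaussian used here as a simple defocus model."""
    half = max(1, int(np.ceil(3 * sigma)))
    ax = np.arange(-half, half + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()

def motion_kernel(length):
    """Normalised horizontal line kernel of odd `length` pixels (linear motion blur)."""
    if length % 2 == 0:
        raise ValueError("length must be odd")
    k = np.zeros((length, length))
    k[length // 2, :] = 1.0
    return k / k.sum()

def blur(img, kernel):
    """2-D convolution with edge replication, so flat regions keep their value."""
    f = np.asarray(img, dtype=float)
    kh, kw = kernel.shape
    padded = np.pad(f, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros_like(f)
    for i in range(kh):
        for j in range(kw):
            # flipped kernel index makes this a true convolution
            out += kernel[kh - 1 - i, kw - 1 - j] * padded[i:i + f.shape[0], j:j + f.shape[1]]
    return out

# Usage: increasing degradation levels for one image (levels are illustrative).
img = np.zeros((32, 32))
img[:, 16:] = 1.0                                       # vertical step edge
defocused = [blur(img, gaussian_kernel(s)) for s in (0.5, 1.0, 2.0)]
moved = [blur(img, motion_kernel(n)) for n in (3, 7, 11)]
```

Segmentation would then be run on every degraded copy and compared against the manual ground truth, level by level.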

This quality study is described in publication [5] below.

The impact of the eight quality components described above on segmentation performance has also been studied. Quality measures are computed locally (around the iris boundaries), and some of them are also computed globally (over the whole image of the eye region). Local quality metrics have been found to be better predictors of segmentation accuracy than global metrics, despite the obvious limitation that they require a segmentation. Some measures also behave differently depending on whether they are computed locally or globally.
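The difference between local and global computation can be sketched as follows: the same measure is averaged either over the whole image or only over an annulus around a boundary, which is why the local variant needs the segmentation (centre and radius) as input. This Python/NumPy sketch uses mean gradient magnitude as a stand-in contrast measure. It is an assumed example, not one of the eight measures of [2], and the function names and band width are hypothetical.

```python
import numpy as np

def annulus_mask(shape, centre, radius, width):
    """True for pixels within `width` pixels of the circle (centre, radius)."""
    yy, xx = np.indices(shape)
    d = np.hypot(yy - centre[0], xx - centre[1])
    return np.abs(d - radius) <= width

def mean_gradient_magnitude(img, mask=None):
    """Average gradient magnitude over the whole image (global) or a mask (local)."""
    fy, fx = np.gradient(np.asarray(img, dtype=float))
    mag = np.hypot(fx, fy)
    return float(mag.mean() if mask is None else mag[mask].mean())

# Usage: dark pupil-like disk; the local (boundary) value is much higher
# than the global one, which is diluted by flat regions.
yy, xx = np.indices((80, 80))
eye = np.where(np.hypot(yy - 40, xx - 40) <= 12, 0.2, 0.8)
band = annulus_mask(eye.shape, (40, 40), 12, 2)
local_q = mean_gradient_magnitude(eye, band)
global_q = mean_gradient_magnitude(eye)
```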

This quality study is described in publication [2] below.

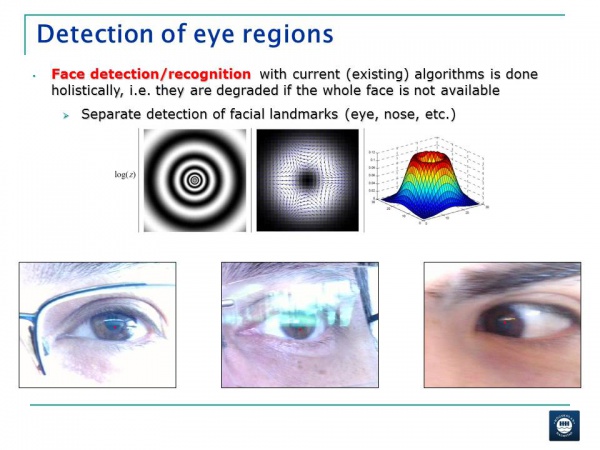

Detection of eye regions

Accurate segmentation of the iris boundaries requires good-quality images acquired in more or less controlled conditions. Moving towards less controlled, distant acquisition, we have developed a system for detecting the complete eye region. The target is to make detection of human faces possible over a wide range of distances, even when the iris texture cannot be reliably obtained (low resolution, off-angle, etc.) or under partial face occlusion (close distances, viewing angle, etc.). This is a necessary step, for example, to zoom into the iris texture and segment the iris boundaries, or to track human faces when the whole face is not available. Existing face detection algorithms model faces holistically, so they fail under face occlusion or sometimes simply with arbitrary (non-frontal) poses. On the other hand, the iris texture can be very difficult to obtain in distant acquisition. The proposed task is an intermediate step, namely detection of the eye region, relying neither on the full face being available nor on a well-defined iris texture.

The approach adopted uses symmetry filters and the Generalized Structure Tensor theory (as in the segmentation of iris boundaries above, where only circular filters were used). We started with symmetry filters capable of detecting patterns of concentric circles. Our assumption is that the region around the eye center shows this kind of pattern and, even though the iris circles are not exactly concentric, this approach has provided promising results. These filters have been evaluated on images acquired with the webcam of a portable tablet PC, showing strong detection capabilities for eye regions.
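Once a symmetry-filter response map is available, candidate eye centres still have to be picked from it. One simple option, assumed here for illustration and not necessarily what the project used, is greedy non-maximum suppression: take the strongest response, suppress its neighbourhood, and repeat. The Python/NumPy sketch below uses hypothetical names; `min_dist` would in practice depend on the expected inter-ocular distance in pixels.

```python
import numpy as np

def top_k_peaks(response, k=2, min_dist=10.0):
    """Greedy non-maximum suppression on a 2-D response map.

    Returns up to k (row, col) positions, strongest first, that are at least
    `min_dist` pixels apart.
    """
    r = np.array(response, dtype=float)   # copy, so the caller's map is untouched
    yy, xx = np.indices(r.shape)
    peaks = []
    for _ in range(k):
        idx = np.unravel_index(np.argmax(r), r.shape)
        if not np.isfinite(r[idx]):
            break                          # everything already suppressed
        peaks.append((int(idx[0]), int(idx[1])))
        r[np.hypot(yy - idx[0], xx - idx[1]) < min_dist] = -np.inf
    return peaks

# Usage: two eye-like peaks plus a weaker neighbour of the first one.
resp = np.zeros((50, 80))
resp[20, 20], resp[21, 21], resp[25, 60] = 5.0, 4.5, 4.0
print(top_k_peaks(resp, k=2, min_dist=10))  # [(20, 20), (25, 60)]
```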

The following is a slide showing some detection results on eye images from the MobBIO database:

Iris Feature Extraction and Matching Algorithms

Impact of quality components in the matching stage

We have also evaluated the impact of the quality components (using the measures developed above) on the performance of two iris matchers, based on Log-Gabor wavelets and on SIFT keypoints respectively (the first is a popular, publicly available iris matcher, while the second is based on public SIFT source code adapted to work on iris images). We observe that the matchers are also sensitive to quality variations, but not necessarily in the same way as the segmentation algorithm. The SIFT matcher is also observed to be more resilient to segmentation inaccuracies. In this sense, segmentation errors may be hidden by the matcher, which highlights the importance of also evaluating the precision of iris segmentation, as proposed in this project, rather than focusing on recognition accuracy only.
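For a Log-Gabor matcher, two binary iris codes are typically compared with a masked fractional Hamming distance, minimised over circular shifts of the angular axis to absorb eye rotation. The Python/NumPy sketch below illustrates that principle under simplifying assumptions (one bit per sample, shifts of one column, hypothetical names). It is not the code of the public matcher mentioned above, nor of the SIFT matcher.

```python
import numpy as np

def iris_hamming_distance(code_a, mask_a, code_b, mask_b, max_shift=8):
    """Minimum masked fractional Hamming distance over angular shifts.

    Codes and masks are boolean arrays (rows: radial samples, columns: angular
    samples). A mask value True marks a valid bit, i.e. not occluded or noisy.
    Returns 1.0 if no shift leaves any valid bit.
    """
    code_a, mask_a = np.asarray(code_a, bool), np.asarray(mask_a, bool)
    best = 1.0
    for s in range(-max_shift, max_shift + 1):
        b = np.roll(np.asarray(code_b, bool), s, axis=1)   # rotate along angle
        mb = np.roll(np.asarray(mask_b, bool), s, axis=1)
        valid = mask_a & mb
        n_valid = int(valid.sum())
        if n_valid == 0:
            continue
        hd = np.count_nonzero((code_a ^ b) & valid) / n_valid
        best = min(best, hd)
    return float(best)

# Usage: a rotated copy of a code matches perfectly; an unrelated code is near 0.5.
rng = np.random.default_rng(0)
code = rng.random((20, 240)) > 0.5
mask = np.ones_like(code)
rotated = np.roll(code, 3, axis=1)
other = rng.random((20, 240)) > 0.5
```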

This quality study is described in publication [2] below.

It is worth noting that successful iris segmentation is crucial for the good performance of iris recognition systems. Previous research has treated iris recognition systems as a black box, evaluating the impact of quality factors at the output of the system (i.e. by looking at recognition performance). The same procedure is traditionally followed to evaluate new developments in the segmentation stage. This project is innovative in that it directly evaluates the precision of the segmentation. The observed results, with segmentation and matching not necessarily behaving in the same way, support the proposed evaluation framework. Experiments also show that quality measures are not necessarily correlated: quality is intrinsically multi-dimensional and is affected by factors of very different nature. Research in this direction includes fusing the estimated quality measures into a single measure with a higher capability to predict segmentation and matching accuracy. Ongoing research also exploits the different sensitivity observed in the two matchers: by using adaptive quality-based fusion schemes, we seek to obtain better performance over a wide range of qualities.
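One straightforward way to fuse several quality measures into a single predictor, assumed here for illustration (the project's actual fusion method is not described on this page), is to z-normalise each measure and fit a linear model to an observed target such as per-image segmentation accuracy. The Python/NumPy sketch below uses hypothetical function names.

```python
import numpy as np

def fit_quality_fusion(Q, target):
    """Least-squares weights mapping z-normalised quality vectors to a target.

    Q: (n_images, n_measures) quality values; target: (n_images,), e.g. segmentation
    accuracy. Returns the normalisation statistics and the weights (bias first).
    """
    Q = np.asarray(Q, dtype=float)
    mu, sd = Q.mean(axis=0), Q.std(axis=0)
    sd[sd == 0] = 1.0                      # constant measures carry no information
    X = np.column_stack([np.ones(len(Q)), (Q - mu) / sd])
    w, *_ = np.linalg.lstsq(X, np.asarray(target, dtype=float), rcond=None)
    return mu, sd, w

def fused_quality(Q, mu, sd, w):
    """Single quality value per image from its vector of quality measures."""
    Q = np.atleast_2d(np.asarray(Q, dtype=float))
    return np.column_stack([np.ones(len(Q)), (Q - mu) / sd]) @ w

# Usage on synthetic data where the target depends linearly on two of three measures.
rng = np.random.default_rng(1)
Q = rng.random((200, 3))
target = 0.5 + 2.0 * Q[:, 0] - Q[:, 2]
mu, sd, w = fit_quality_fusion(Q, target)
```

In practice the weights would be fitted on a training set and the fused measure evaluated on held-out images; a non-linear model could replace the least-squares fit without changing the interface.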

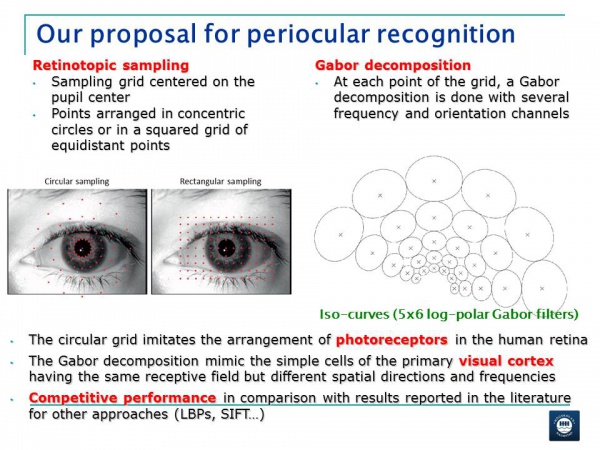

Personal recognition based on periocular information

Periocular recognition is a direction that has recently gained attention in biometrics. The periocular region is the part of the face in the vicinity of the eye, including the eye, eyelids, lashes and eyebrows. This region can be easily obtained with existing setups for face and iris, and the requirement of user cooperation can be relaxed. An evident advantage of the periocular region is its availability over a wide range of acquisition distances, even when the iris texture cannot be reliably obtained (low resolution, off-angle, etc.) or under partial face occlusion (close distances). Most face recognition systems use a holistic approach that requires a full face image, so their performance suffers under occlusion. In addition, current commercial iris systems impose constrained acquisition conditions, prompting the user to position the eye in front of the sensor (normally at about 20-40 cm). In the current context of ubiquitous access to information, with the proliferation of portable devices and mobility requirements, relaxing acquisition constraints is a factor with a great (if not the greatest) impact on the mass acceptance of these technologies. Other application fields receiving much attention are forensics and surveillance, where controlled acquisition is simply impossible. These considerations of more flexible and less intrusive acquisition are central to the objectives of this project, and periocular work is therefore highly relevant.

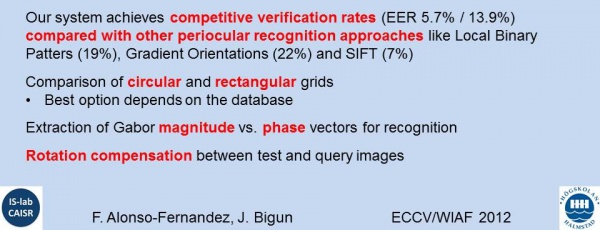

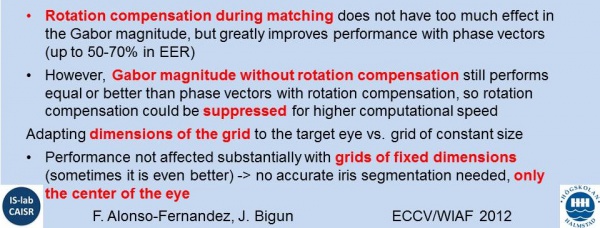

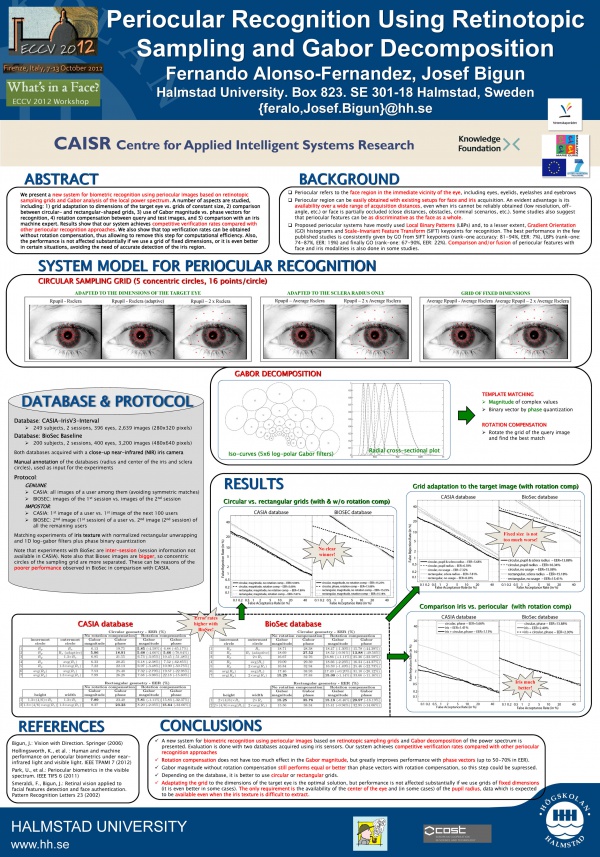

Work in this direction has consisted of developing a periocular recognition system based on retinotopic sampling grids centered on the pupil, followed by Gabor decomposition at different frequencies and orientations. Our system achieves competitive verification rates compared with existing recognition approaches, which are based mainly on Local Binary Patterns and histograms of gradient orientations. We have also carried out experiments that demonstrate the validity of our recognition system on less controlled images. For example, it does not need an accurate detection of the iris region; the center of the eye is enough. This makes the system useful in a wide range of applications where the iris texture cannot be obtained, and makes it possible to integrate it with the eye-region detection system developed in the previous sub-objective.
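The general structure of such a descriptor can be sketched as follows. Sampling points are laid on concentric rings whose radii grow geometrically (dense near the eye centre, sparse in the periphery), and at each point the magnitudes of complex Gabor responses at several frequencies and orientations are collected into a feature vector; two vectors are then compared with a similarity score. This Python/NumPy sketch is an assumed, simplified version (spatial-domain Gabor kernels, cosine similarity, illustrative grid and filter parameters, hypothetical names), not the exact filters, grid or distance of [3] and [4].

```python
import numpy as np

def retinotopic_grid(centre, n_rings=5, n_points=16, r_min=4.0, r_max=40.0):
    """(row, col) sampling points on rings with geometrically growing radii."""
    radii = np.geomspace(r_min, r_max, n_rings)
    angles = 2 * np.pi * np.arange(n_points) / n_points
    pts = [(centre[0] + r * np.sin(a), centre[1] + r * np.cos(a))
           for r in radii for a in angles]
    return np.round(pts).astype(int)

def gabor_kernel(freq, theta, sigma):
    """Complex Gabor kernel (cycles/pixel, orientation in radians), zero-mean."""
    half = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    u = x * np.cos(theta) + y * np.sin(theta)
    k = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2)) * np.exp(2j * np.pi * freq * u)
    return k - k.mean()                    # remove DC so flat regions give ~0

def periocular_features(img, centre, freqs=(0.05, 0.1, 0.2), n_orient=6):
    """Unit-norm vector of Gabor magnitudes at every retinotopic grid point."""
    f = np.asarray(img, dtype=float)
    kernels = [gabor_kernel(fq, np.pi * o / n_orient, 0.5 / fq)
               for fq in freqs for o in range(n_orient)]
    half = max(k.shape[0] // 2 for k in kernels)
    pts = retinotopic_grid(centre)
    H, W = f.shape
    over = max(0, -pts.min(), pts[:, 0].max() - (H - 1), pts[:, 1].max() - (W - 1))
    pad = half + int(over)
    f = np.pad(f, pad, mode="edge")        # grid points near the border stay valid
    feats = []
    for r, c in pts + pad:
        for k in kernels:
            h = k.shape[0] // 2
            patch = f[r - h:r + h + 1, c - h:c + h + 1]
            feats.append(abs(np.sum(patch * np.conj(k))))
    v = np.asarray(feats)
    return v / (np.linalg.norm(v) + 1e-12)

def similarity(feat_a, feat_b):
    """Cosine similarity of two unit-norm feature vectors (1 = identical)."""
    return float(np.dot(feat_a, feat_b))

# Usage with random stand-in images (real use: periocular images and eye centres).
rng = np.random.default_rng(2)
img_a, img_b = rng.random((128, 128)), rng.random((128, 128))
fa = periocular_features(img_a, (64, 64))
fb = periocular_features(img_b, (64, 64))
```

Only the eye centre is needed as input, which matches the property described above: no iris boundary segmentation is required to place the grid.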

The periocular recognition system is described in publications [3] and [4] below. The following are slides from the conference presentations and the poster presenting publication [4]:

Multibiometric Fusion

The periocular system has also been compared with a dedicated iris expert based on popular public software that uses 1D log-Gabor filters. The dedicated iris expert works much better than the proposed periocular approach, which encourages us to continue improving our system. The fusion of the iris and periocular systems also shows a relative improvement in some of our experiments. This points to the potential complementarity of the iris texture and the periocular region, as reported in other works in the literature. Fusion between the systems is done using adaptive logistic regression, which has shown better performance than other fusion rules in previous research work by the fellow.
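Score-level fusion with logistic regression can be sketched as follows: each expert's score is normalised, and a linear model is trained so that its output approximates the log-odds of a genuine comparison; the fused score is that linear combination. The Python/NumPy sketch below fits plain (non-adaptive) L2-regularised logistic regression by gradient descent on synthetic scores. The adaptive variant mentioned above is not reproduced, and all names and hyperparameters are assumptions.

```python
import numpy as np

def fit_logistic_fusion(scores, labels, lr=0.5, n_iter=3000, l2=1e-3):
    """Fit w, b so that sigmoid(w . z + b) estimates P(genuine | expert scores).

    scores: (n, n_experts); labels: 1 for genuine, 0 for impostor comparisons.
    Scores are z-normalised first; the statistics are returned for reuse.
    """
    X = np.asarray(scores, dtype=float)
    y = np.asarray(labels, dtype=float)
    mu, sd = X.mean(axis=0), X.std(axis=0)
    sd[sd == 0] = 1.0
    Z = (X - mu) / sd
    w, b = np.zeros(Z.shape[1]), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(Z @ w + b)))
        err = p - y                            # gradient of the log-loss w.r.t. logits
        w -= lr * (Z.T @ err / len(y) + l2 * w)
        b -= lr * err.mean()
    return mu, sd, w, b

def fused_score(scores, mu, sd, w, b):
    """Fused log-odds score; higher means more likely genuine."""
    Z = (np.atleast_2d(np.asarray(scores, dtype=float)) - mu) / sd
    return Z @ w + b

# Usage: column 0 is an iris *distance* (low = genuine), column 1 a periocular
# *similarity* (high = genuine); logistic regression learns the opposite signs.
rng = np.random.default_rng(3)
n = 500
gen = np.column_stack([rng.normal(0.30, 0.05, n), rng.normal(0.70, 0.10, n)])
imp = np.column_stack([rng.normal(0.47, 0.02, n), rng.normal(0.50, 0.10, n)])
scores = np.vstack([gen, imp])
labels = np.r_[np.ones(n), np.zeros(n)]
mu, sd, w, b = fit_logistic_fusion(scores, labels)
```

A design advantage of this rule is that experts with different score conventions (distances versus similarities) need no manual sign flipping: the learned weights absorb it.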

The fusion with the periocular recognition system is evaluated in publication [4] below.

Existing face recognition systems are based on a global model of the face, so they fail if the whole face is not available. We are also working on the fusion of periocular information with other local face regions, where the identity model of the person is built from the available regions. This is the approach being followed in the literature to cope with incomplete faces.
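At score level, building the identity model from whatever regions are available can be as simple as fusing only the scores of the regions that were actually detected. A minimal Python sketch, assuming scores already normalised to a common range and optional per-region weights (the region names, weighting and averaging rule are hypothetical, not the project's method):

```python
from typing import Dict, Optional

def fuse_available_regions(region_scores: Dict[str, Optional[float]],
                           weights: Optional[Dict[str, float]] = None) -> Optional[float]:
    """Weighted mean of the scores of face regions that were observed.

    A score of None marks a region that is missing or occluded; it is skipped
    instead of penalising the comparison. Returns None if no region is available.
    """
    available = {r: s for r, s in region_scores.items() if s is not None}
    if not available:
        return None
    w = {r: (weights or {}).get(r, 1.0) for r in available}
    total = sum(w.values())
    if total == 0:
        return None
    return sum(w[r] * s for r, s in available.items()) / total

# Usage: the mouth is occluded, so only the two periocular regions and the nose count.
print(fuse_available_regions({"left_periocular": 0.8, "right_periocular": 0.7,
                              "nose": 0.6, "mouth": None}))
```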

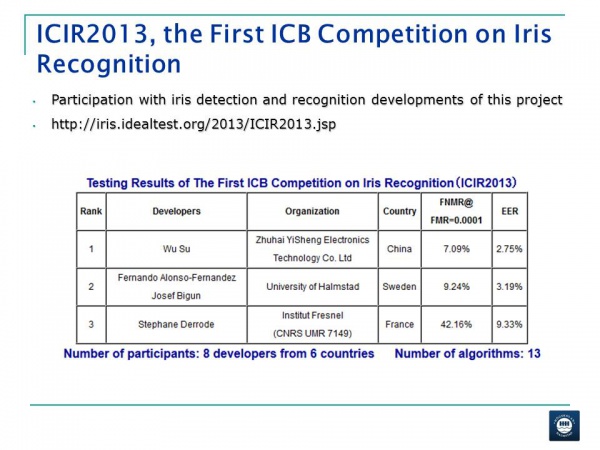

ICIR2013, the First ICB Competition on Iris Recognition

We participated in ICIR2013, the First ICB Competition on Iris Recognition, with developments from this project. The competition was organized by the Institute of Automation, Chinese Academy of Sciences (CASIA), in conjunction with the 6th International Conference on Biometrics (ICB-2013). Its objective was to evaluate iris recognition algorithms on data including motion blur, non-linear deformation, eyeglasses and specular reflections. Our submission combined an iris segmentation stage based on the GST with an iris matching stage based on the fusion of retinotopic sampling grids and SIFT keypoints. It obtained excellent results, ranking second out of 13 participating systems.

Our submission to ICIR2013 is briefly described in the report [1] below. The following is a slide with a snapshot from the website of the competition.

References

- [1] F. Alonso-Fernandez, J. Bigun, “Halmstad University submission to the First ICB Competition on Iris Recognition (ICIR2013)”, Technical Report, Halmstad University, August 2013.

- [2] F. Alonso-Fernandez, J. Bigun, “Quality Factors Affecting Iris Segmentation and Matching”, Proc. 6th IAPR Intl Conf on Biometrics, ICB, Madrid, June 4-7, 2013.

- [3] F. Alonso-Fernandez, J. Bigun, “Biometric Recognition Using Periocular Images”, Proc. Swedish Symposium on Image Analysis, SSBA, Goteborg, Sweden, March 14-15, 2013.

- [4] F. Alonso-Fernandez, J. Bigun, “Periocular Recognition Using Retinotopic Sampling and Gabor Decomposition”, Proc. Intl Workshop “What's in a Face?” WIAF, in conjunction with the European Conference on Computer Vision, ECCV, Springer LNCS-7584, pp. 309-318, Firenze, Italy, October 7-13, 2012.

- [5] F. Alonso-Fernandez, J. Bigun, “Iris Boundaries Segmentation Using the Generalized Structure Tensor. A Study on the Effects on Image Degradation”, Proc. Intl Conf on Biometrics: Theory, Applications and Systems, BTAS, Washington DC, September 23-26, 2012.

- [6] F. Alonso-Fernandez, J. Bigun, “Iris Segmentation Using the Generalized Structure Tensor”, Proc. Swedish Symposium on Image Analysis, SSBA, Stockholm, Sweden, March 7-9, 2012.